Intro

My younger brother once told me his favorite photo I had ever taken was of an abandoned Mercedes in a forest, surrounded by tall grass. Maybe he sensed something simple and true in it.

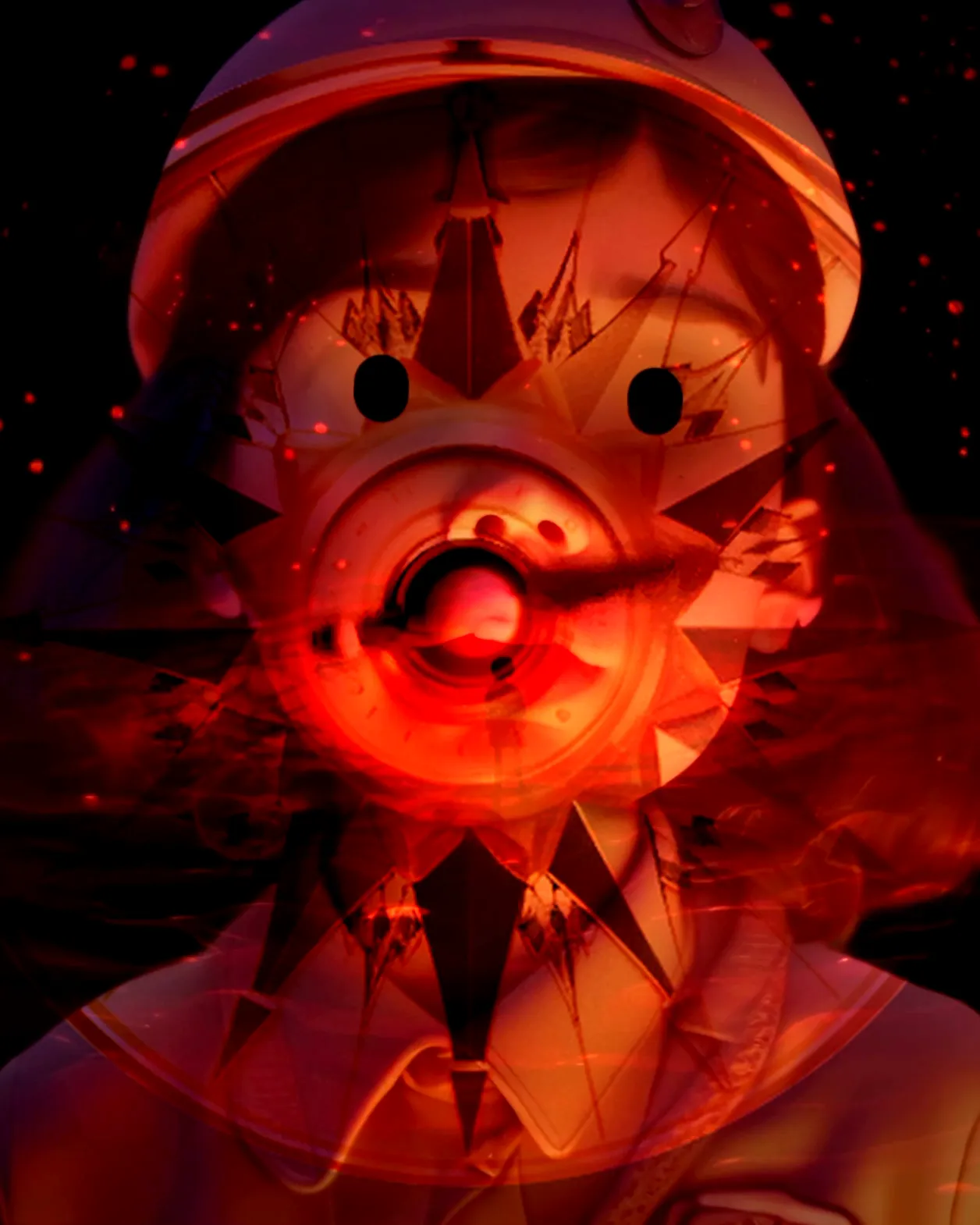

Lately, I’ve been deep into experimenting with ComfyUI: exploring workflows, making mistakes, and slowly learning a new tool.

This small series was built with FLUX.1 [dev] inside ComfyUI, paired with a few custom LoRAs to push toward a gritty, analog 35mm look.

ComfyUI is an open-source tool for visual AI that can run fully locally on your computer. It is different from the big plug-and-play AI platforms. It’s node-based and open, more like a modular synthesizer than an app. You’re not clicking presets. You’re building a visual process. I’d definitely recommend trying it out yourself.
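To make the modular-synth comparison a bit more concrete, here is a rough sketch of what a node graph can look like when written out as plain data. It is not the workflow used for this series. The node class names and input names follow stock ComfyUI nodes as I understand them, the checkpoint and LoRA file names are hypothetical placeholders, the sampler settings are arbitrary, and Detail Daemon is left out entirely.

```python
import json

# Hypothetical file names; substitute whatever sits in your own models folders.
CHECKPOINT = "flux1-dev-fp8.safetensors"
LORA = "analog-35mm-lora.safetensors"


def build_graph(prompt_text: str, seed: int = 0) -> dict:
    """Return a node graph. Keys are node ids; a link is [source_node_id, output_index]."""
    return {
        # Loads model (output 0), text encoder (output 1) and VAE (output 2).
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": CHECKPOINT}},
        # Patches model and text encoder with the LoRA weights.
        "2": {"class_type": "LoraLoader",
              "inputs": {"model": ["1", 0], "clip": ["1", 1], "lora_name": LORA,
                         "strength_model": 0.8, "strength_clip": 0.8}},
        # Positive prompt: trigger words plus scene description.
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"clip": ["2", 1], "text": prompt_text}},
        # Empty negative prompt.
        "4": {"class_type": "CLIPTextEncode",
              "inputs": {"clip": ["2", 1], "text": ""}},
        "5": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 1024, "height": 768, "batch_size": 1}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["2", 0], "positive": ["3", 0], "negative": ["4", 0],
                         "latent_image": ["5", 0], "seed": seed, "steps": 20,
                         "cfg": 1.0, "sampler_name": "euler",
                         "scheduler": "simple", "denoise": 1.0}},
        "7": {"class_type": "VAEDecode",
              "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage",
              "inputs": {"images": ["7", 0], "filename_prefix": "oldtimer"}},
    }


def dangling_links(graph: dict) -> list:
    """List (node_id, input_name) pairs whose link points at a node that is not in the graph."""
    missing = []
    for node_id, node in graph.items():
        for name, value in node["inputs"].items():
            is_link = (isinstance(value, list) and len(value) == 2
                       and isinstance(value[0], str))
            if is_link and value[0] not in graph:
                missing.append((node_id, name))
    return missing


graph = build_graph("d1g1cam, amateur photo, a poison green Lamborghini abandoned in a forest")
payload = json.dumps({"prompt": graph})  # JSON body wrapping the graph
```

Each entry is one module, and the `["2", 0]` style pairs are the patch cables between them. As far as I know, a locally running ComfyUI server accepts a payload like this on its `/prompt` endpoint, but treat that as an assumption and check it against your own install.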

Prompt Example

"d1g1cam, amateur photo, low-lit, overexposure, Low-resolution photo, shot on a mobile phone, noticeable noise in dark areas. (Trigger words for the LoRA)

A poison green Lamborghini sits abandoned in the middle of a dense, overgrown forest. The car is nearly swallowed by wild vegetation — very tall grasses rise halfway up the doors, and creeping vines curl over the headlights and rear spoiler. The vivid green paint pops unnaturally against the earthy tones of the forest, but is streaked with dirt and leaf debris. The windshield is fogged, and small plants grow from cracks in the soil beneath the tires. Trees surround the scene tightly, with filtered light casting uneven shadows. The photo is messy and raw, with heavy digital grain, clipped highlights, and soft focus — like a forgotten snapshot taken on a cheap mobile phone, lost in the depths of the camera roll.

"

Detail Daemon

Helpful Resources

- ComfyUI: https://www.comfy.org/

- Model FLUX.1 [dev]: https://huggingface.co/black-forest-labs/FLUX.1-dev

- FLUX.1 [dev] LoRA: https://civitai.com/models/978314/ultrareal-fine-tune

- Upscale Custom Node Detail Daemon: https://github.com/Jonseed/ComfyUI-Detail-Daemon